Configuring AI

Set up and manage connections to AI providers, including cloud-based APIs and local models.

Octarine supports multiple AI providers, so you can choose the models that fit your workflow. Use cloud-based services like OpenAI and Anthropic, or run models locally with Ollama or LM Studio.

AI features require a Pro License.

Setting Up Providers

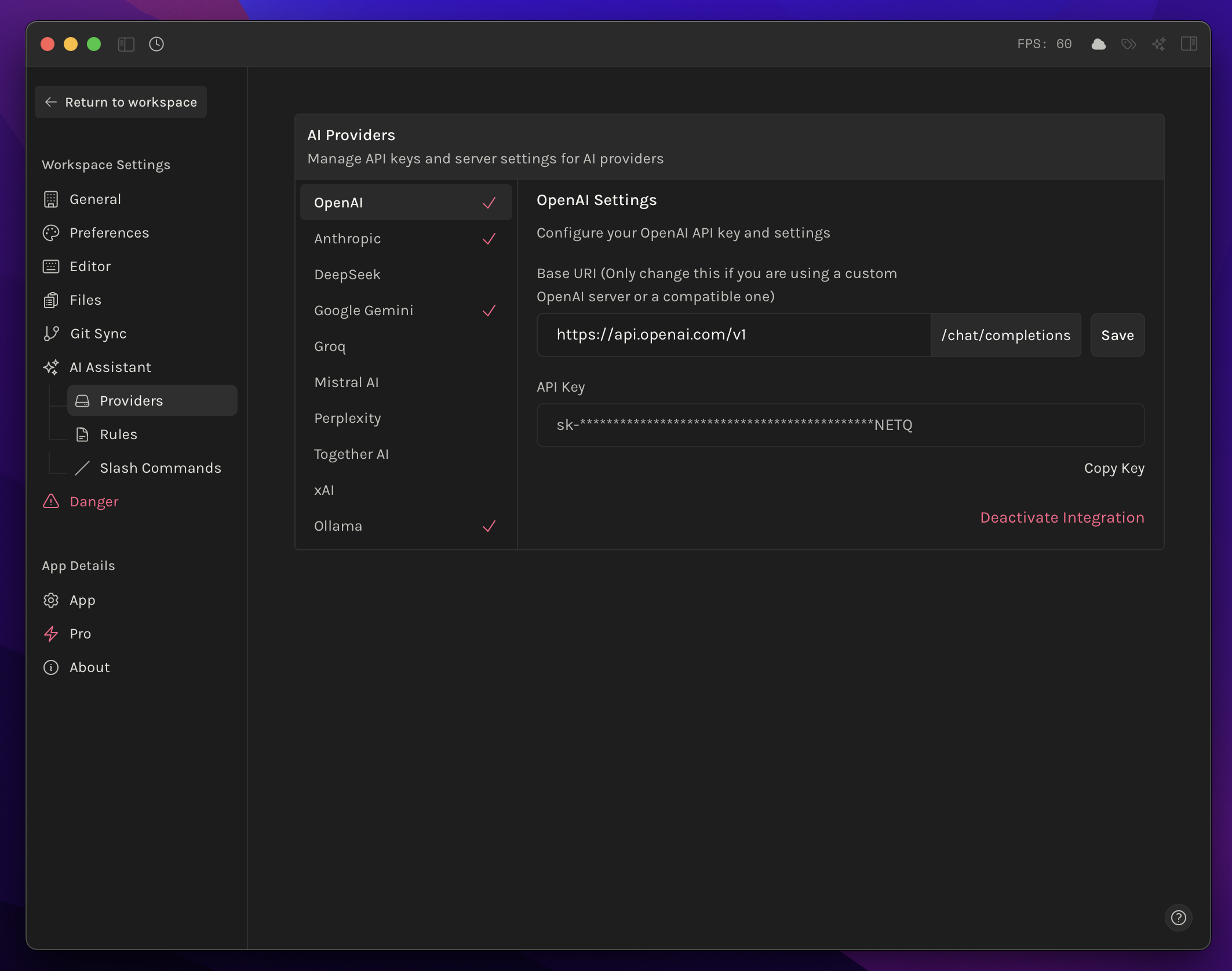

Go to Settings > AI > Providers to configure your AI connections. You'll see a list of supported providers:

- Cloud-based (OpenAI, Anthropic, etc.) — Enter your API key from the provider's console.

- Local (Ollama) — No credentials required.

Octarine validates API keys automatically and displays a checkmark when connected. Enable multiple providers simultaneously and switch between them at any time.
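If a key fails validation, it can help to test it outside Octarine first. The sketch below is not Octarine's own validation logic, which this page does not describe. It assumes that a successful request to each provider's public model-listing endpoint means the key is accepted. The function names `build_key_check_request` and `key_is_valid` are hypothetical and exist only for this example.

```python
import urllib.error
import urllib.request

# Public model-listing endpoints. A 200 response implies the key was accepted.
ENDPOINTS = {
    "openai": "https://api.openai.com/v1/models",
    "anthropic": "https://api.anthropic.com/v1/models",
}


def build_key_check_request(provider: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the provider's model list (hypothetical helper)."""
    if provider not in ENDPOINTS:
        raise ValueError(f"unsupported provider: {provider}")
    if provider == "openai":
        # OpenAI expects a bearer token.
        headers = {"Authorization": f"Bearer {api_key}"}
    else:
        # Anthropic expects the key in x-api-key plus an API version header.
        headers = {"x-api-key": api_key, "anthropic-version": "2023-06-01"}
    return urllib.request.Request(ENDPOINTS[provider], headers=headers, method="GET")


def key_is_valid(provider: str, api_key: str, timeout: float = 10.0) -> bool:
    """Return True if the provider accepts the key, False if it rejects it."""
    request = build_key_check_request(provider, api_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):
            return False  # key rejected by the provider
        raise  # rate limits, outages, etc. say nothing about the key itself
```

Calling `key_is_valid("openai", "<your key>")` makes one network request. A `False` result points to a rejected key, while a raised exception suggests a network or provider problem rather than a bad credential.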

Selecting Models

After configuring providers, available models appear in a dropdown selector grouped by provider. Each model displays its context window size and key features.

Key behaviors:

- Switch models instantly, even mid-workflow

- Your last selected model is remembered across sessions

- Access the model selector from the AI panel or editor toolbar when AI features are active

Local Models

Run AI models on your own machine for privacy and offline use. Check out our dedicated guides:

- Working with Ollama — Run models locally using Ollama

- Working with LM Studio — Use LM Studio for local AI models
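Before selecting a local model, you may want to confirm that Ollama is running and see which models it has installed. The sketch below assumes Ollama is listening on its default address, `http://localhost:11434`, and uses its `/api/tags` endpoint, which lists locally available models. The helper names are hypothetical; adjust the URL if you changed Ollama's host or port.

```python
import json
import urllib.request
from typing import List, Optional

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama address


def parse_model_names(payload: str) -> List[str]:
    """Extract model names from the JSON body returned by /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL,
                      timeout: float = 3.0) -> Optional[List[str]]:
    """Return installed model names, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(resp.read().decode("utf-8"))
    except OSError:
        # Covers connection refused, timeouts, and urllib's URLError.
        return None
```

A result of `None` from `list_local_models()` suggests Ollama is not running or is listening elsewhere. An empty list means the server is up but no models have been pulled yet.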

Managing Multiple Providers

With multiple providers configured, all models appear in a unified list. Switch between cloud and local models without reconfiguration.

Security and maintenance:

- API keys are stored securely on your device

- Update or remove keys anytime in Settings

- Clear error messages appear if a provider goes offline or credentials expire