Working with LM Studio

Learn how to connect a local LM Studio server to Octarine so you can use the Writing Assistant and Ask Octarine.

Want to run AI models on your computer without relying on the cloud? LM Studio lets you do exactly that. This guide walks you through installing it, downloading a model, and connecting it to Octarine so you can use the Writing Assistant and Ask Octarine features completely locally.

Prerequisites

You'll need macOS or Windows (Linux support is experimental). Make sure you have enough CPU, RAM, and storage—especially if you're planning to run larger models.

Step 1: Install LM Studio

Head to the LM Studio website and download the installer for your operating system.

On macOS, open the .dmg file and follow the prompts. On Windows, run the .exe and complete the installation wizard. If you're on Linux, check the official website for the latest experimental instructions.

Step 2: Download a Model

Once you've installed LM Studio, launch it from your applications folder or start menu.

You'll see a built-in model library. Browse it or use the search bar to find a model—popular options include Llama 2, Mistral, and Phi-3. When you find one you like, click Download next to it and wait for it to finish.

Step 3: Start the Local Server

LM Studio can run a local API server that Octarine connects to.

Go to the API tab (usually in the sidebar; depending on your LM Studio version, it may be labeled Developer or Local Server) and click Start Server. By default, the server runs on http://localhost:1234. Make a note of the URL, because you'll need it in the next step.
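If you'd like to confirm the server is actually up before moving on, a small script can ask it which models it exposes. This is a minimal sketch, assuming the server is on the default address from this step and that it answers on the OpenAI-style /v1/models route that LM Studio provides. The helper names (models_url, parse_model_ids, list_models) are made up for this example.

```python
import json
from urllib.request import urlopen

# Assumption: the default address from this guide. Change it if your
# server is listening on a different port.
BASE_URL = "http://localhost:1234"


def models_url(base_url: str = BASE_URL) -> str:
    """Build the model-listing URL, tolerating a trailing slash on the base."""
    return base_url.rstrip("/") + "/v1/models"


def parse_model_ids(payload: dict) -> list[str]:
    """Pull model identifiers out of an OpenAI-style {"data": [{"id": ...}]} body."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    """Ask the running server which models it exposes.

    Raises an OSError subclass (for example URLError) if the server
    cannot be reached.
    """
    with urlopen(models_url(base_url), timeout=timeout) as response:
        return parse_model_ids(json.load(response))
```

With the server running, calling list_models() should return the identifiers of the models LM Studio currently exposes. A connection error usually means the server hasn't been started or is listening on a different port.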

Step 4: Connect LM Studio to Octarine

Now you're ready to hook everything up.

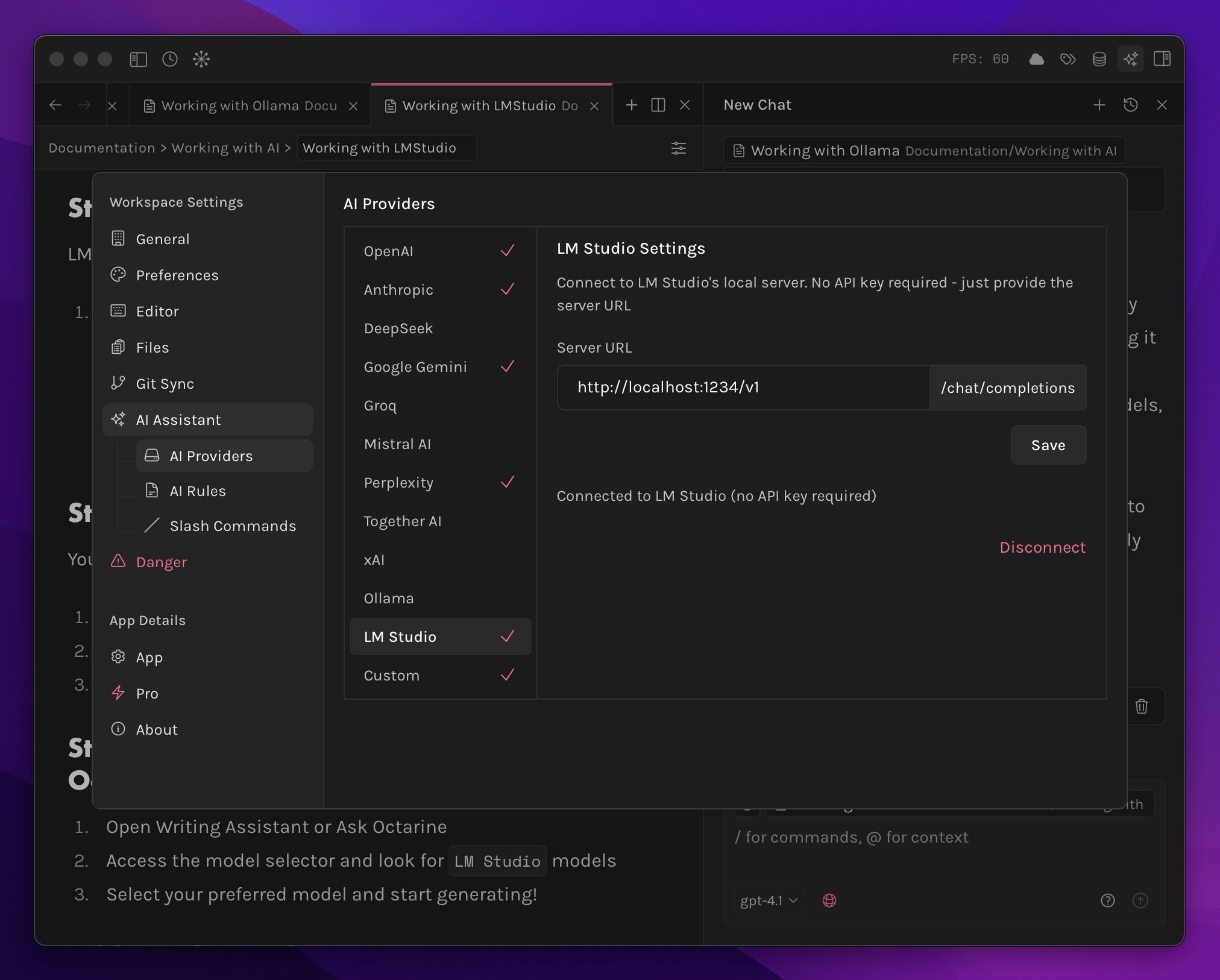

In Octarine, go to Settings → AI Assistant → AI Providers and click on LM Studio. Enter the local server URL from the previous step (usually http://localhost:1234) and press Save.

Step 5: Start Using Your Local Models

Open the Writing Assistant or Ask Octarine and click the model selector. You should see your LM Studio models listed there. Select the one you want and start generating!
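If you're curious what talking to a local model looks like outside of Octarine, here is a rough sketch of a chat request sent to LM Studio's OpenAI-compatible /v1/chat/completions route. It is an illustration only and does not show how Octarine itself makes requests. The function names are hypothetical, and the model identifier you pass in should be one your own server lists.

```python
import json
from urllib.request import Request, urlopen


def build_chat_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat payload with a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def extract_reply(body: dict) -> str:
    """Return the assistant text from the first choice in the response body."""
    return body["choices"][0]["message"]["content"]


def ask_local_model(
    model: str,
    prompt: str,
    base_url: str = "http://localhost:1234",  # assumption: default address
    timeout: float = 120.0,  # local generation can be slow on modest hardware
) -> str:
    """POST the prompt to the local server and return the model's reply."""
    request = Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(build_chat_request(model, prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

For example, ask_local_model("your-model-id", "Summarize this note in one sentence.") would send the prompt to whichever model identifier you supply, with "your-model-id" standing in for a real one.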

A Few Things to Know

By default, the LM Studio API only accepts requests from localhost, which keeps it safe for local use: other devices on your network can't reach it.

To manage your models (delete them, update them, and so on), use the LM Studio interface.

When you're done, stop the server by going back to the API tab and clicking Stop Server, or just close LM Studio altogether.
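Because the server is localhost-only by default, the URL you enter in Octarine should point at your own machine. As a small sketch, the check below tells you whether a URL targets a loopback address. The helper name is_loopback_url is made up for this example and is not part of LM Studio or Octarine.

```python
import ipaddress
from urllib.parse import urlparse


def is_loopback_url(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback IP address."""
    host = urlparse(url).hostname  # None when the URL has no scheme or host
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # The host is some other name, not an IP literal.
        return False
```

The default http://localhost:1234 passes this check, as does http://127.0.0.1:1234. An address such as http://192.168.1.20:1234 does not, and would only work if the server were configured to accept connections from the network.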